Learning Outcomes:

- To understand the differential impact of AI systems on gender minorities

- To recognise the various factors that contribute to discrimination against gender minorities by AI systems

- To understand the need for a feminist ethic for AI

- To understand what would constitute a “feminist” ethic for AI and how to achieve it

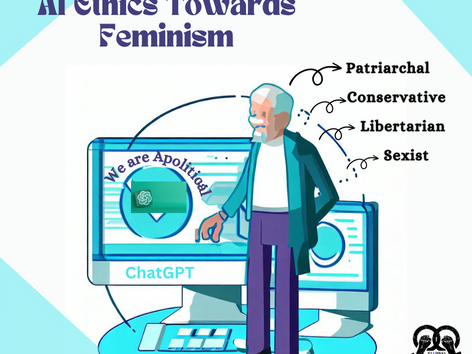

Politicising AI: Human By Design

Put simply, scientists and engineers have claimed that, unlike humans with their human biases, AI is a machine free of moral judgements, which supposedly makes it capable of an "impartial" inquiry that humans cannot achieve. However, the veracity of such a claim is highly debatable.

What Makes AI A Feminist Issue?

Imagining A Feminist AI

Charu Pawar is a 20-year-old empath, trying to navigate the patriarchal world with a feminist heart. Find her debating “smashing-the-patriarchy” over a plate of momos on college lawns, in dingy tea shops, and practically everywhere else…